Big Data

INTRODUCTION:-

- Before the Internet existed, the kings were the people who held power. In today’s world, the kings are the people who hold the data, because nowadays “DATA IS EVERYTHING”.

- In the earlier world, everything worked fine without the Internet, so there were no users of Google, Facebook and the like. Today, the total number of internet users around the world has grown by 346 million in the past 12 months, with almost 950,000 new users coming online each day. There are almost 4.57 billion internet users in the world.

- Globally, internet user numbers are growing at an annual rate of more than 8 percent, and year-on-year growth is much higher in many developing economies.

- The question then arises: how is all this huge data, or Big Data, managed and stored?

What is Big Data?

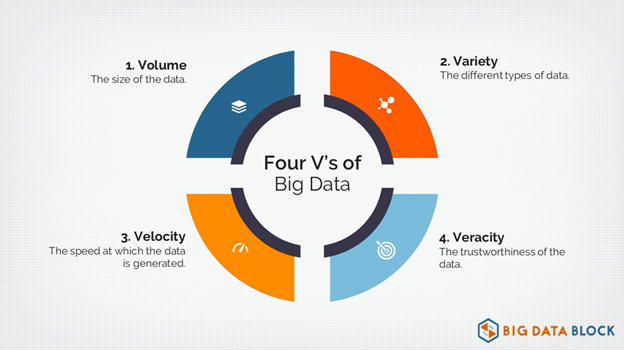

The term “big data” refers to data that is so large, fast or complex that it’s difficult or impossible to process using traditional methods. The act of accessing and storing large amounts of information for analytics has been around a long time. But the concept of big data gained momentum in the early 2000s when industry analyst Doug Laney articulated the now-mainstream definition of big data as the three V’s:

VOLUME

Volume is the V most associated with big data because, well, volume can be big. What we’re talking about here is quantities of data that reach almost incomprehensible proportions.

Facebook, for example, stores photographs. That statement doesn’t begin to boggle the mind until you start to realize that Facebook has more users than China has people. Each of those users has stored a whole lot of photographs. Facebook is storing roughly 250 billion images.

Can you imagine? Seriously. Go ahead. Try to wrap your head around 250 billion images. Try this one. As far back as 2016, Facebook had 2.5 trillion posts. Seriously, that’s a number so big it’s pretty much impossible to picture.

So, in the world of big data, when we start talking about volume, we’re talking about insanely large amounts of data. As we move forward, we’re going to have more and more huge collections. For example, as we add connected sensors to pretty much everything, all that telemetry data will add up.

How much will it add up? Consider this. Gartner, Cisco, and Intel estimate there will be between 20 and 200 billion connected IoT devices (no, they don’t agree, surprise!). The number is huge no matter what. But it’s not just the quantity of devices.

Consider how much data is coming off of each one. I have a temperature sensor in my garage. Even with a one-minute level of granularity (one measurement a minute), that’s still 525,950 data points in a year, and that’s just one sensor. Let’s say you have a factory with a thousand sensors, you’re looking at half a billion data points, just for the temperature alone.
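The arithmetic above is easy to check for yourself. Here is a minimal sketch, assuming an average year of 365.2425 days (which is where a figure of roughly 525,950 comes from); the function name `readings_per_year` is made up for this illustration.

```python
# Back-of-the-envelope estimate of sensor data volume.
# Assumption: an average year of 365.2425 days.
MINUTES_PER_YEAR = 365.2425 * 24 * 60


def readings_per_year(sensors: int, readings_per_minute: float = 1.0) -> int:
    """Return the approximate number of data points produced in one year."""
    return round(sensors * readings_per_minute * MINUTES_PER_YEAR)


one_sensor = readings_per_year(1)     # the garage temperature sensor
factory = readings_per_year(1000)     # a factory with a thousand sensors

print(f"one sensor:    {one_sensor:,} readings per year")
print(f"1,000 sensors: {factory:,} readings per year")
```

Change `readings_per_minute` to see how quickly the total grows when a sensor reports every second instead of every minute.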

Or, consider our new world of connected apps. Everyone is carrying a smartphone. Let’s look at a simple example, a to-do list app. More and more vendors are managing app data in the cloud, so users can access their to-do lists across devices. Since many apps use a freemium model, where a free version is used as a loss-leader for a premium version, SaaS-based app vendors tend to have a lot of data to store.

Todoist, for example (the to-do manager I use) has roughly 10 million active installs, according to Android Play. That’s not counting all the installs on the Web and iOS. Each of those users has lists of items — and all that data needs to be stored. Todoist is certainly not Facebook scale, but they still store vastly more data than almost any application did even a decade ago.

Then, of course, there are all the internal enterprise collections of data, ranging from energy industry to healthcare to national security. All of these industries are generating and capturing vast amounts of data.

That’s the volume vector.

VELOCITY

Remember our Facebook example? 250 billion images may seem like a lot. But if you want your mind blown, consider this: Facebook users upload more than 900 million photos a day. A day. So that 250 billion number from last year will seem like a drop in the bucket in a few months.

Velocity is the measure of how fast the data is coming in. Facebook has to handle a tsunami of photographs every day. It has to ingest it all, process it, file it, and somehow, later, be able to retrieve it.
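To get a feel for that rate, it helps to turn the daily figure into a per-second one. This is only a rough sketch: it assumes uploads are spread evenly across the day, which real traffic is not, and the helper name `per_second` is made up for this illustration.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def per_second(events_per_day: float) -> float:
    """Average events per second, assuming an even spread over the day."""
    return events_per_day / SECONDS_PER_DAY


# 900 million photos a day, the figure quoted above.
photos_per_second = per_second(900_000_000)
print(f"about {photos_per_second:,.0f} photos every second")
```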

Here’s another example. Let’s say you’re running a marketing campaign and you want to know how the folks “out there” are feeling about your brand right now. How would you do it? One way would be to license some Twitter data from Gnip (acquired by Twitter) to grab a constant stream of tweets, and subject them to sentiment analysis.

That feed of Twitter data is often called “the firehose” because so much data (in the form of tweets) is being produced, it feels like being at the business end of a firehose.
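Here is a toy sketch of what "subjecting a stream to sentiment analysis" could look like. Everything in it is an assumption for illustration: the tiny word lists, the sample messages, and the function names are made up, and a real system would read from a live feed and use a trained model instead of a word list.

```python
from collections import deque
from typing import Iterable, Iterator

# Hypothetical toy lexicon; real sentiment analysis uses trained models.
POSITIVE = {"love", "great", "awesome", "good"}
NEGATIVE = {"hate", "terrible", "awful", "bad"}


def score(text: str) -> int:
    """Positive words count +1, negative words count -1."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    return sum((w in POSITIVE) - (w in NEGATIVE) for w in words)


def rolling_sentiment(stream: Iterable[str], window: int = 3) -> Iterator[float]:
    """Yield the average score of the most recent `window` messages."""
    recent = deque(maxlen=window)
    for text in stream:
        recent.append(score(text))
        yield sum(recent) / len(recent)


# A made-up list stands in for the firehose.
sample_stream = [
    "I love this brand, great service!",
    "Terrible delivery, bad packaging.",
    "Pretty good overall.",
    "Awful support, I hate waiting.",
]
averages = list(rolling_sentiment(sample_stream))
for text, avg in zip(sample_stream, averages):
    print(f"{avg:+.2f}  {text}")
```

The point of the rolling window is that messages are scored one at a time as they arrive, so nothing has to wait for the whole data set, which never ends anyway.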

Here’s another velocity example: packet analysis for cyber security. The Internet sends a vast amount of information across the world every second. For an enterprise IT team, a portion of that flood has to travel through firewalls into a corporate network.

Unfortunately, due to the rise in cyber attacks, cyber crime, and cyber espionage, sinister payloads can be hidden in that flow of data passing through the firewall. To prevent compromise, that flow of data has to be investigated and analyzed for anomalies, patterns of behavior that are red flags. This is getting harder as more and more data is protected using encryption. At the very same time, bad guys are hiding their malware payloads inside encrypted packets.

That flow of data is the velocity vector.

VARIETY

You may have noticed that I’ve talked about photographs, sensor data, tweets, encrypted packets, and so on. Each of these is very different from the others. This data isn’t the old rows and columns and database joins of our forefathers. It’s very different from application to application, and much of it is unstructured. That means it doesn’t easily fit into fields on a spreadsheet or a database application.

Take, for example, email messages. A legal discovery process might require sifting through thousands to millions of email messages in a collection. Not one of those messages is going to be exactly like another. Each one will consist of a sender’s email address, a destination, plus a time stamp. Each message will have human-written text and possibly attachments.
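The email example can be made concrete with Python's standard `email` package. This is a minimal sketch using a made-up message (the addresses, date, and text are invented for illustration): the headers drop neatly into named fields, while the body stays free-form text.

```python
from email import message_from_string
from email.utils import parsedate_to_datetime

# A made-up message used only for illustration.
raw = """\
From: alice@example.com
To: bob@example.com
Date: Mon, 06 Jan 2020 09:30:00 +0000
Subject: Contract draft

Hi Bob, the revised draft is attached. Let me know what you think.
"""

msg = message_from_string(raw)

# The structured part: fits into rows and columns.
structured = {
    "sender": msg["From"],
    "recipient": msg["To"],
    "sent_at": parsedate_to_datetime(msg["Date"]),
}

# The unstructured part: human-written text with no fixed schema.
body = msg.get_payload()

print(structured)
print(body)
```

A database can index the sender and the time stamp easily. Making sense of the body is the hard part, and it is different for every message.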

Photos and videos and audio recordings and email messages and documents and books and presentations and tweets and ECG strips are all data, but they’re generally unstructured, and incredibly varied.

All that data diversity makes up the variety vector of big data.

Why Is Big Data Important?

The importance of big data doesn’t revolve around how much data you have, but what you do with it. You can take data from any source and analyze it to find answers that enable:

1) cost reductions

2) time reductions

3) new product development and optimized offerings

4) smart decision making.

When you combine big data with high-powered analytics, you can accomplish business-related tasks such as:

- Determining root causes of failures, issues and defects in near-real time.

- Generating coupons at the point of sale based on the customer’s buying habits.

- Recalculating entire risk portfolios in minutes.

- Detecting fraudulent behavior before it affects your organization.

Companies That Offer Big Data Solutions:-

1. Amazon :-

Amazon offers a popular cloud-based platform. Its main big data product is Elastic MapReduce (Amazon EMR), which is based on Hadoop. Many big data analytics solutions are easily deployable using Amazon Web Services, and AWS allows fast access to IT resources. Besides EMR, the analytics services offered by Amazon include Amazon Elasticsearch Service and Amazon Athena.

2. Microsoft :-

Microsoft has three big data solutions: HDInsight, Microsoft Analytics Platform System and HDP for Windows. Its partnership with Hortonworks produced HDInsight, a cloud-hosted service that runs on Azure clusters. The Microsoft Analytics Platform System works with data in Hadoop and in relational databases. HDP for Windows is a big data cluster that can be installed and configured on Windows.

3. Google :-

Google’s BigQuery is a cloud-based analytics platform for quickly analyzing huge data sets. Google also offers other big data solutions, such as Cloud Dataflow, a programming model that covers batch computation, stream analytics, and ETL, and Cloud Dataproc, which provides managed Spark and Hadoop services. Cloud Datalab is another feather in its cap, used to visualize and analyze data.

4. IBM:-

IBM has launched some top-rated products, including the InfoSphere database platform, DB2, Informix, and the Cognos and SPSS analytics applications. Along with these, IBM provides many big data solutions, including Hadoop System, Stream Computing, and Federated Navigation. It also offers big data products such as IBM BigInsights for Apache Hadoop, IBM BigInsights on Cloud and IBM Streams, which stand out among big data vendors. All these solutions and products help organizations capture and analyze vast data sets in a straightforward and effective manner.

CASE STUDY OF FACEBOOK ON BIG DATA :-

Facebook’s main business strategy is to understand who its users are. By understanding its users’ behaviors, interests, and geographic locations, Facebook shows customized ads on their timelines. How is this possible?

Billions of pieces of unstructured data are generated every day, including images, text, video, and everything else. With the help of deep learning (AI), Facebook brings structure to this unstructured data.

A deep learning analysis tool can learn to recognize images that contain pizza without ever being told what a pizza looks like. It does this by analyzing the context of a large number of images that contain pizza. By recognizing similar images, the tool can then separate out the images that contain pizza. This is how Facebook brings structure to unstructured data.

Deep learning has several use cases at Facebook:

Textual Analysis:-

Facebook uses DeepText to analyze text data and extract meaning from context. This is semi-unsupervised learning: the tool doesn’t need a dictionary, and nobody has to explain the meaning of every word to it. Instead, it focuses on how words are used.

Facial Recognition:-

The deep learning application used for this is DeepFace, which teaches itself to recognize people’s faces in photos. That’s why Facebook suggests our friends’ names when we tag them in a post. It is an advanced image recognition tool because it can tell whether the person in two different photos is the same or not.

Target Advertisements:-

Facebook uses deep neural networks to decide how to target audiences with ads. This artificial intelligence teaches itself to find out as much as it can about the audience, and clusters users so that it can serve them ads in the most insightful way. Because of this highly targeted advertising, Facebook has become the toughest competitor to the best-known search engine, Google. There are a lot of other interesting data handling methodologies behind the Facebook business model, and a lot of controversial things too. But we don’t want to focus on those here.

How Google Solved Big Data Problem?

This problem troubled Google first because of its search engine data, which exploded with the growth of the internet industry. Hard figures for that growth are difficult to come by. Google smartly resolved the difficulty using the theory of parallel processing and designed an algorithm called MapReduce. This algorithm divides a task into small pieces, assigns those pieces to many computers joined over a network, and then assembles the partial results to form the final result dataset.

This looks logical once you understand that I/O is the most costly operation in data processing. Traditionally, database systems stored data on a single machine, and when you needed data, you sent commands in the form of an SQL query. The system fetched the data from storage, put it in local memory, processed it and sent the result back to you. This works well when the amount of data and the processing demand are limited.

But with Big Data, you cannot collect all the data on a single machine. You MUST store it across multiple computers (maybe thousands of machines). And when you need to run a query, you cannot aggregate the data in a single place because of the high I/O cost. So here is what the MapReduce algorithm does: it runs your query on each node individually, where the data is present, and then aggregates the partial results and returns the final result to you.

This brings two significant improvements: first, very low I/O cost, because data movement is minimal; and second, less time, because your job runs in parallel on multiple machines, each working on a smaller data set.
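The idea can be sketched in a few lines of Python with a word count. This is a simplified illustration, not Google's implementation: the three lists are made-up data standing in for three machines, threads stand in for the network of computers, and the shuffle step that real MapReduce systems run between map and reduce is left out.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce

# Hypothetical data already split across three "nodes"; each inner list
# stands in for the part of the data set stored on one machine.
nodes = [
    ["big data is big", "data is everywhere"],
    ["map then reduce"],
    ["reduce the data", "big results"],
]


def map_phase(lines):
    """Runs where the data lives: count words in one node's local lines."""
    counts = Counter()
    for line in lines:
        counts.update(line.split())
    return counts


def reduce_phase(left, right):
    """Merge two partial results into one."""
    return left + right


# Threads stand in for separate machines working in parallel.
with ThreadPoolExecutor(max_workers=len(nodes)) as pool:
    partials = list(pool.map(map_phase, nodes))

# Only the small per-node summaries are moved and combined.
totals = reduce(reduce_phase, partials, Counter())
print(totals.most_common(3))
```

Notice that the raw lines never leave their node. Only the small `Counter` summaries travel, which is where the low I/O cost comes from.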

Thanks for Reading

HAPPY READING!